Get Started

Start incorporating high-performance computing today!

Whether you are already well versed in using a supercomputing cluster or just starting out for the first time, we are here to bring you up to speed. Before you begin, we would love to have a conversation with you about your current research goals, as well as any potential classroom use you have in mind, so we can ensure you have everything you need.

Request Access + Training

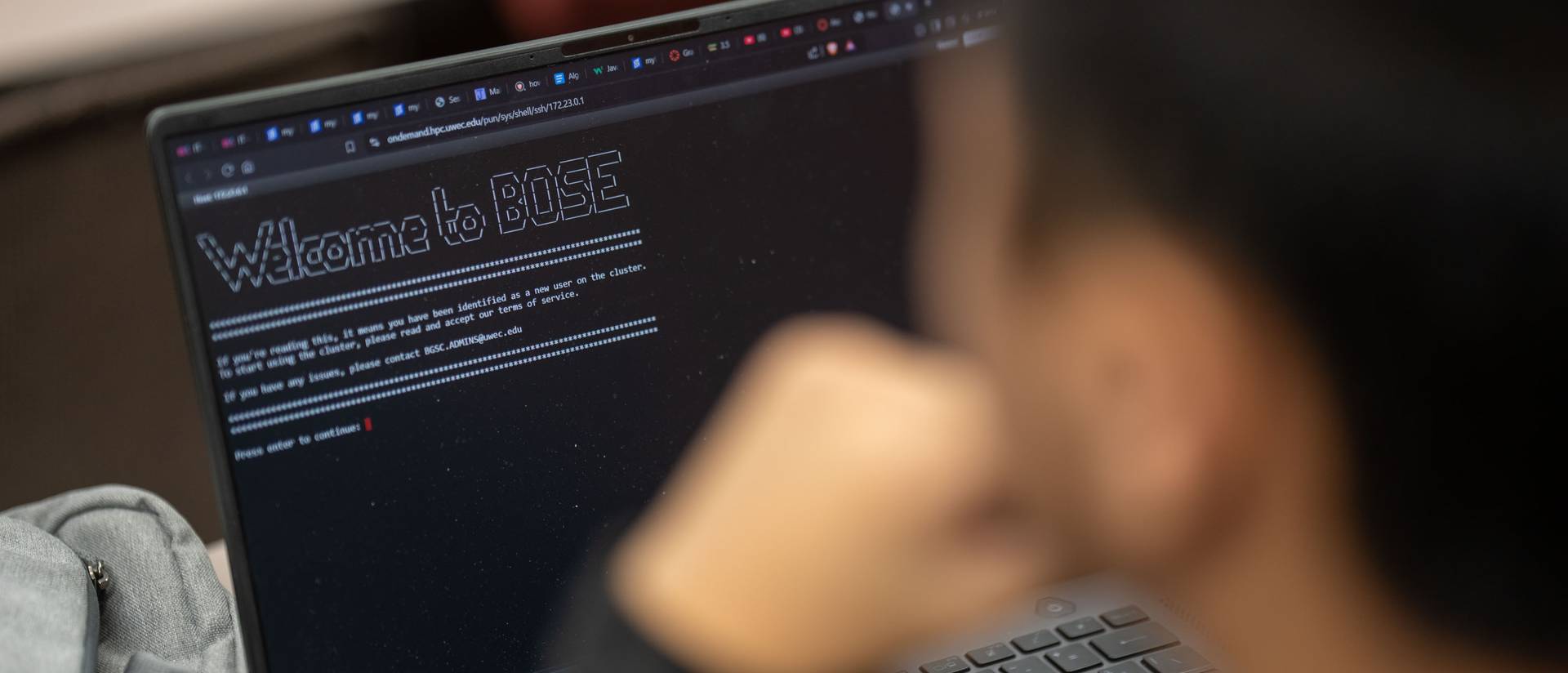

Before you are able to log into our BOSE supercomputing cluster, or use the Open OnDemand system, you'll need to first reach out to us to enable access for your UW-Eau Claire account.

Requesting Access:

Start Form Now (UWEC Login Required)

Don't already have a UW-Eau Claire account? Please contact BGSC.ADMINS@uwec.edu and we'll begin the process of setting up an affiliate account, which will be needed to access any of our supercomputing resources.

Training/Presentations:

We request that all first-time users of our clusters go through a training program, or just a simple conversation, with one of our team members to get you and your group up and running. We'll go over your research, any needs that you may have, and the steps involved in submitting your first job.

We can also scale our trainings and general presentations to match what's needed, accommodating anything from a one-on-one in an office to a full classroom of students. Topics we have covered so far include:

- High Performance Computing / Computational Science

- Blugold Center for High Performance Computing

- Open OnDemand

- Linux / Bash Commands

- Python / Jupyter

- Using Slurm

Access the Cluster

There are two primary methods of accessing our clusters: using SSH through a terminal program, or using Open OnDemand through a web browser. Each has its pros and cons depending on your skills and comfort level.

Note: You must be physically on campus or using the campus VPN to access our computing clusters.

Via SSH (Command Prompt / Terminal / PuTTY)

Clusters: BOSE, BGSC

| Hostname | bose.hpc.uwec.edu |
|---|---|
| Port | 50022 |
| Username | UWEC Username - all lowercase without @uwec.edu |
| Password | UWEC Password |
| Password w/ Okta Hardware Token (Starting January 8th) | UWEC Password,TokenNumber |
| Command | ssh username@bose.hpc.uwec.edu -p 50022 |

Note: If you are on campus and not connected to GlobalProtect, you'll also need to authenticate with Okta Verify or have a hardware token available.

| Hostname | bgsc.hpc.uwec.edu |
|---|---|
| Port | 22 |
| Username | UWEC Username - all lowercase without @uwec.edu |
| Password | UWEC Password |
| Command | ssh username@bgsc.hpc.uwec.edu |
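To avoid retyping the hostname and port each time, the connection details from the tables above can be stored in an OpenSSH client configuration. The following is only a sketch: the helper name print_hpc_ssh_config and the host aliases "bose" and "bgsc" are illustrative choices, not something provided by the clusters.

```shell
#!/bin/bash
# Sketch: print OpenSSH client config entries for both clusters.
# print_hpc_ssh_config is a hypothetical helper name; the aliases
# "bose" and "bgsc" are illustrative and can be anything you like.
print_hpc_ssh_config() {
    local user="$1"   # UWEC username, all lowercase, without @uwec.edu
    cat <<EOF
Host bose
    HostName bose.hpc.uwec.edu
    Port 50022
    User $user

Host bgsc
    HostName bgsc.hpc.uwec.edu
    Port 22
    User $user
EOF
}

# Example usage (replace "myuser" with your own username):
#   print_hpc_ssh_config myuser >> ~/.ssh/config
#   ssh bose
```

With entries like these in place, tools that read the same configuration, such as scp and sftp, should pick up the non-standard port for BOSE automatically.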

Using a hardware token or code with Okta?

By default, when you connect to our BOSE cluster over SSH, a push notification will be sent to the Okta Verify app on your phone for you to approve.

If you are unable to use your phone and are using a hardware token instead, enter the number shown on the device directly after your password, separated by a comma, when you log in.

Example:

Username: myuser

Password: mypass,123456

Open OnDemand

Clusters: BOSE

Open OnDemand is a web platform that provides full access to our BOSE computing cluster with the ease of a web browser. Through it you can transfer and manage your files, use the built-in terminal program, and take advantage of our Jupyter and Desktop integrations.

Submit Job Using Slurm

To run jobs and coordinate the machines they run on, we use the Slurm job scheduling system. Slurm takes in a list of your requirements (specified in an sbatch .sh script), compares them against the current resource availability on the cluster, and runs the job when a matching node is available.

Slurm Commands

- myjobs - View your current pending and running jobs (custom to UWEC)

- squeue - View all pending and running jobs on the cluster

- savail - View current node usage and availability (custom to UWEC)

- sbatch <script.sh> - Submit job script

- scancel <jobid> - Cancel a pending or running job
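The commands above are usually chained together: submit a script, note its job id, then check on or cancel that job. A minimal sketch of that workflow follows. The helper name submit_job and the file name my-job.sh are hypothetical; the sketch relies on sbatch's --parsable option, which prints just the job id (optionally followed by ";cluster") instead of a full sentence.

```shell
#!/bin/bash
# Sketch: submit a job script and capture its job id for later commands.
# submit_job is a hypothetical helper name, not a command on the cluster.
submit_job() {
    local script="$1"
    local out
    # --parsable makes sbatch print "jobid" or "jobid;cluster"
    out="$(sbatch --parsable "$script")" || return 1
    # Drop the optional ";cluster" suffix so only the numeric id remains
    printf '%s\n' "${out%%;*}"
}

# Example usage on a login node (my-job.sh is a placeholder file name):
#   job_id="$(submit_job my-job.sh)"
#   squeue -j "$job_id"    # show only this job
#   scancel "$job_id"      # cancel it if something looks wrong
```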

Example Slurm Script

Below is an example Slurm script that contains your resource requirements (number of CPU cores, memory, GPUs) and the commands you want Slurm to run on your behalf, such as Python or Q-Chem.

#!/bin/bash

# ---- SLURM SETTINGS ---- #

# -- Job Specific -- #

#SBATCH --job-name="My Job" # What is your job called?

#SBATCH --output=output.txt # Output file - Use %j to inject job id, like output-%j.txt

#SBATCH --error=error.txt # Error file - Use %j to inject job id, like error-%j.txt

#SBATCH --partition=week # Which group of nodes do you want to use? Use "GPU" for graphics card support

#SBATCH --time=7-00:00:00 # What is the longest you expect the job to run? Format: D-HH:MM:SS

# -- Resource Requirements -- #

#SBATCH --mem=5G # How much memory do you need?

#SBATCH --ntasks-per-node=4 # How many CPU cores do you want to use per node (max 64)?

#SBATCH --nodes=1 # How many nodes do you need to use at once?

##SBATCH --gpus=1 # How many GPUs do you need (max 3)? Remove first "#" to enable.

# -- Email Support -- #

#SBATCH --mail-type=END # What notifications should be emailed about? (Options: NONE, ALL, BEGIN, END, FAIL, REQUEUE)

# ---- YOUR SCRIPT ---- #

module load python-libs/3 # Load the Python library / software we want to use

python my-script.py
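The --time value in the script above is written as days-hours:minutes:seconds, so 7-00:00:00 means seven days. As a small illustration of the format, the sketch below converts a run time given in minutes into that notation. The helper name slurm_time is hypothetical and not part of Slurm.

```shell
#!/bin/bash
# Sketch: format a duration in minutes as Slurm's D-HH:MM:SS time limit.
# slurm_time is a hypothetical helper name, not a Slurm command.
slurm_time() {
    local total_minutes="$1"
    local days=$(( total_minutes / 1440 ))          # 1440 minutes per day
    local hours=$(( (total_minutes % 1440) / 60 ))  # leftover whole hours
    local minutes=$(( total_minutes % 60 ))         # leftover minutes
    printf '%d-%02d:%02d:00\n' "$days" "$hours" "$minutes"
}

# Example usage:
#   slurm_time 90      # 1.5 hours
#   slurm_time 10080   # 7 days, matching the example script
```

The printed value can be pasted after --time= in a job script or passed to sbatch on the command line.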

View Job Results

A job can finish or stop in a variety of ways, such as:

- Completed (Successfully)

- Failed

- Node Failure

- Timeout

- Canceled

When one of these happens, you'll want to know the status of your job, the results of your calculations, and any errors that appeared.

Email Notifications:

When you use the "#SBATCH --mail-type=X" option in your Slurm script, you can be alerted when the job starts, finishes, or fails so you don't have to keep checking in on it. You can choose multiple email notifications by separating them with a comma, or use "ALL" to receive all emails.

Options:

- NONE - Don't send any emails

- BEGIN - Send an email when your job starts

- END - Send an email when your job finishes

- FAIL - Send an email when your job fails

- ALL - Send an email when your job starts, finishes, or fails

By default, emails are sent to your <username>@uwec.edu email account. If you would like to change this to another address, you can add "#SBATCH --mail-user=<email@other.com>" to your Slurm script.
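As an illustration, a job that should only send an email when it starts and if it fails, with those emails going to a different address, might carry the two lines below. This is a sketch of a job script fragment, and the address is only a placeholder.

```shell
#!/bin/bash
#SBATCH --mail-type=BEGIN,FAIL       # Comma-separated list: email on start and on failure
#SBATCH --mail-user=email@other.com  # Placeholder address; replace with your own
```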

View official docs for mail-type and mail-user

Output Files:

Beyond any output files created by your program itself, Slurm can write the standard output and error text to files for you to view in real time. This is the same text you would see if you ran the program's commands manually at the command prompt.

#SBATCH --output=output.txt (Standard Output Text)

#SBATCH --error=error.txt (Standard Error Text)

Tip: Use "%j" in your file name to automatically add the job id. This will allow you to retain past Slurm output logs rather than overwriting them each time.
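Once logs are kept per job, a common need is finding the most recent one. The sketch below assumes the job script used "#SBATCH --output=output-%j.txt", so the logs are named output-<jobid>.txt, and picks the file with the highest job id. The helper name latest_job_log is hypothetical.

```shell
#!/bin/bash
# Sketch: find the log with the highest job id in a directory.
# Assumes logs are named output-<jobid>.txt (from --output=output-%j.txt).
# latest_job_log is a hypothetical helper name.
latest_job_log() {
    local dir="${1:-.}" best="" best_id=-1 f id
    for f in "$dir"/output-*.txt; do
        [ -e "$f" ] || continue                 # glob matched nothing
        id="${f##*/output-}"                    # strip directory and prefix
        id="${id%.txt}"                         # strip extension
        case "$id" in
            ''|*[!0-9]*) continue ;;            # skip names without a numeric id
        esac
        if [ "$id" -gt "$best_id" ]; then
            best_id="$id"
            best="$f"
        fi
    done
    # Print the match; return non-zero if no log was found
    [ -n "$best" ] && printf '%s\n' "$best"
}

# Example usage: follow the newest log as the job writes to it
#   tail -f "$(latest_job_log)"
```

Comparing job ids numerically rather than alphabetically matters here, since output-100.txt would otherwise sort before output-99.txt.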

Resource Usage:

To view how much CPU and memory your job uses, we have the 'seff' command available. Slurm's efficiency program was built to show users how well a job used the resources it requested once the job has stopped. We have extended it to support real-time metrics by linking to our Grafana dashboards.

seff <jobid>

Contact the HPC Team

Interested in incorporating high-performance computing in your work but aren't sure where to start? Reach out to the HPC team today and we'll work with you and your group to get everyone up and running.

Contact Us